Our team continuously monitors the headlines for the latest accounts payable (AP) and security news. We bring you all the essential stories in our cyber brief so your team can stay secure.

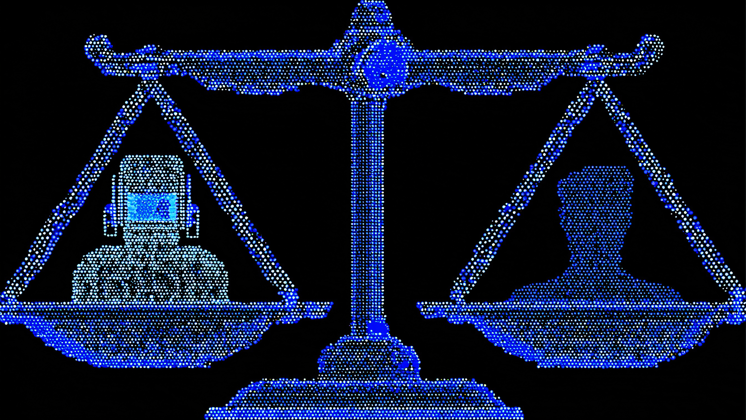

Report: cyber risk programs omit finance teams

A study commissioned by cybersecurity firm Qualys found most companies still treat cybersecurity as an IT issue, giving less weight to finance and other business priorities. Just 22% of organisations involve finance teams in risk discussions, while fewer than one-third align security programs with business objectives.

Qualys VP Mayuresh Ektare warned that programs detached from operational, financial, and regulatory stakes are ineffective. Experts stress that CFOs must partner with CISOs to quantify risks, guide investment, and communicate cybersecurity risks in financial terms. Not only do we think CFOs should be included in security discussions, we think CFOs are best-placed to drive an overall anti-cybercrime strategy and know how to distinguish it from cybersecurity measures. See our full strategic guide.

Digital natives more likely to be deceived by AI deepfakes

New research indicates that the people most confident in spotting AI scams, particularly Gen Z, tend to be the most vulnerable: 30% of Gen Z were successfully phished, compared with 12% of baby boomers.

Despite a 62% overall rise in reported scam victims, fear of AI fraud has fallen by 18% year-on-year. Scammers now use AI to craft personalised messages, clone voices, and mimic brands or trusted contacts with alarming accuracy.

Report: generative AI fuelling million-dollar fraud losses

A recent report claims that one in four enterprises lost over $1 million in a single fraud attack last year, as generative AI accelerates large-scale sophisticated scams.

The report warns that generative AI has turned fraud into coordinated, cross-system business attacks that exploit gaps between teams and tools. The Qualys study already showed serious gaps between IT and finance, and this report's findings support that insight: finance–security misalignment worsens fraud risk, and only 27% of organisations share ownership of fraud prevention.

Deepfake scams surge with executive impersonation attacks

The alarm bell has been ringing for years, but evidence continues to mount: AI-generated deepfakes are taking social engineering scams to another level. Deepfake-related cyberattacks in the US exceeded 105,000 last year — roughly one every five minutes — marking a sharp rise from 2023, according to Adaptive Security CEO Brian Long.

Once rare, these scams are becoming more prevalent. Criminals target employees with privileged access, posing as CEOs or senior officials in convincing video calls to pressure them into wiring funds or sharing sensitive data. High-profile examples of such fraud tactics have made headlines since at least last year, and finance leaders and security specialists now confirm the trend.

WA resident loses almost $250k in scam

Albany police are investigating after a Western Australian resident lost nearly $250,000 in a phone scam in which malicious actors posed as staff from the Australian Cyber Security Centre.

Over months, the target was coerced through encrypted apps, remote access software, and even an in-person meeting with a local contact. The case highlights a trend of scammers combining digital and physical tactics to build trust. Authorities warn that recovery is difficult and that social engineering is becoming harder to detect.

Gold Coast couple lose $250k in targeted home deposit scam

A Gold Coast couple lost $250,000 in a spear phishing scam that hijacked their house deposit during settlement. Scammers impersonated the couple’s conveyancer with near-perfect emails, altering only the suffix of the email address, and tricked them into transferring funds to a fraudulent account. Police have linked the account to a Melbourne student, with investigations now led by Victoria Police.

ANZ recovered $82,000 of the stolen funds. The case illustrates a broader shift: high-value, personalised scams are replacing low-value, broad phishing attempts. With AI making personalisation research and tactics more sophisticated and cheaper, scammers no longer need to fire off generic, suspicious-looking emails in the hope that some recipients aren’t paying attention or are easily deceived. They can identify potential targets in minutes and use AI tools such as large language models (LLMs) to hone their social engineering attempts. Synthetic media such as deepfakes and voice conversion will only make these impersonation attempts harder to detect over time.